I Simulated 100 Men vs a Gorilla 1M Times So You Don’t Have To

How It Began

It started, as these things often do, with a meme.

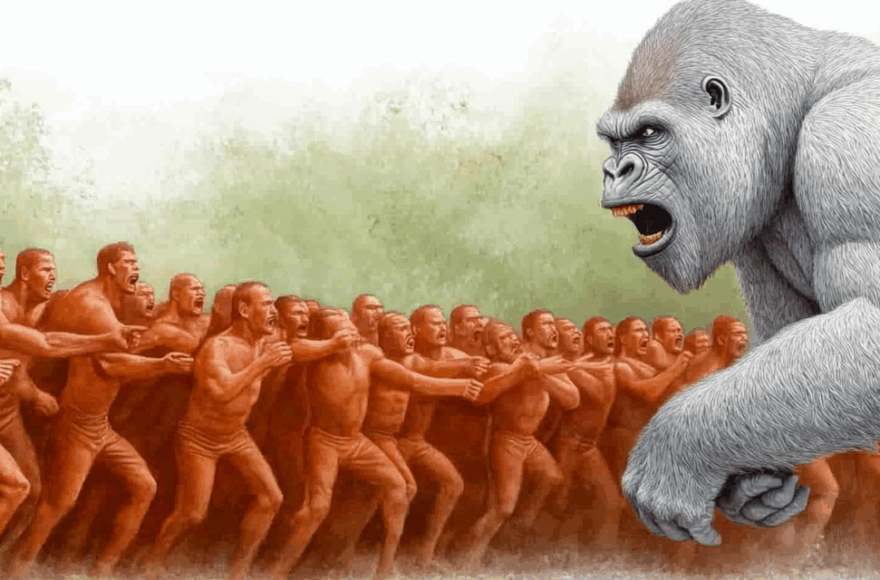

A hypothetical question, “Could 100 average adult men take down a silverback gorilla in hand-to-hand combat?”, started bouncing around social media years ago. It spread across Reddit, Twitter, and eventually TikTok, gaining the kind of traction that only absurdly specific internet hypotheticals can. It’s a debate that’s equal parts comedy, testosterone, and misplaced confidence, but it is also, strangely, a fascinating design challenge.

So I decided to take it seriously. Not emotionally. Not morally. Just technically.

Over the course of a few days, I built a full-scale battle simulator in Rust. I modeled both the human and gorilla combatants with baseline stats inspired by biological research, military doctrine, RPG mechanics, and credible sources. I designed a simple grid-based combat arena and wrote a rules engine to simulate encounters round by round. Then I ran it a million times.

But I wasn’t here to settle the argument. I was interested in what happens when you take the premise seriously — not just as a joke, but as a design problem. What does it take to model an absurd idea with technical rigor? What can AI contribute when you treat meme culture like a simulation brief? And what does it say about us that we even want to know who would win? Those were the real questions I set out to explore.

The Origin of the Meme

The “100 men vs. a gorilla” scenario traces its roots to internet forums like Reddit and Twitter in the early 2010s, where hypothetical combat matchups became a recurring format for comedy and casual debate. The premise likely gained traction due to its absurdity — a kind of irresistible mix of overconfidence, imagined strategy, and primal spectacle.

Its popularity resurged on TikTok in 2023, with creators posting dramatic reenactments, faux strategy breakdowns, and mock-serious discussions about how the fight might unfold. Some users leaned into tactics — claiming the key would be to swarm and exhaust the gorilla — while others insisted no number of unarmed men could match its strength and agility. As with most memes, the debate became less about answers and more about participation.

The meme even inspired tangential discussions in mainstream media and comedy, with echoes of the format showing up in podcast debates, YouTube reaction videos, and sketch comedy. But the humor always masked something deeper: a kind of cultural fascination with limits — of the human body, of nature, of violence, and of collective belief.

That blend of absurdity and intensity is part of what made this simulation such an interesting experiment. It’s not just about whether the men win or lose — it’s about why we even want to know.

These kinds of hypotheticals thrive online for a reason. They’re frictionless entry points for collective performance — easy to understand, easy to debate, and impossible to resolve. They bypass expertise and invite improvisation, drawing in everyone from gym bros to game theorists. The format rewards escalation: the more outlandish the claim or confident the strategy, the more engagement it draws. Argument becomes entertainment. Participation becomes performance.

And there’s a particular flavor to this one. It’s not a coincidence that this scenario captured the imaginations of mostly young men online. There’s something in it about unproven toughness, imagined valor, and the fantasy of coordinated domination. It’s a kind of anonymous masculinity ritual — ironic, maybe, but not entirely unserious. Why this fight? Why 100 men? Why unarmed? These aren’t just details — they’re signals of how certain online spaces channel competitiveness, humor, and insecurity into hypotheticals that feel like hero quests.

We’ll explore that more later. For now, it’s enough to recognize that this meme didn’t just persist because it was funny — it persisted because it said something unspoken, and everyone felt it.

Designing a Combat Simulation

Framing the Problem

Before you can simulate a fight between 100 men and a gorilla, you have to make a decision: are you trying to answer the question, or explore what it means to ask it?

From the beginning, this project was about taking the meme seriously — not to prove or disprove a point, but to model what happens when you treat absurdity with structure. That meant establishing parameters, enforcing constraints, and building a simulation engine that could support consistency over chaos.

The core challenge was obvious: how do you model a fight that would never happen in real life — ethically, legally, or logistically — in a way that produces useful or interesting results?

You can’t just hand-wave it with vibes and bravado (though there’s plenty of that online already). You need mechanics. You need stats. You need repeatability.

And maybe most importantly, you need to resist the temptation to tip the scale. If you’re trying to make a point, you can bend the rules. If you’re trying to test a system, you can’t. This wasn’t a fanfic or a fantasy. It was a model — imperfect, sure, but built to run at scale with consistent logic.

Key Constraints

No Weapons

The first and most important constraint: no weapons of any kind.

This decision wasn’t arbitrary. The internet versions of the meme almost universally frame the scenario as unarmed men versus one gorilla — a test of raw physicality rather than tactics, tools, or technology. Including weapons would have completely changed the nature of the simulation, introducing variables like range, coordination, and lethality that push the scenario into military planning or fantasy territory.

Keeping it unarmed forced a more grounded design. It meant evaluating the biological limits of the human body — punch strength, stamina, coordination — against one of the most physically dominant land mammals on Earth. It also reinforced the absurdity of the question: what happens when 100 bare-handed humans confront a creature evolved to dominate its ecosystem through sheer muscle?

From a simulation standpoint, this constraint created an interesting design challenge. You can’t just give the humans better tools — you have to give them better positioning, smarter swarming, or just sheer numbers. That leads to a different kind of problem-solving: one rooted in movement mechanics, attrition, and probability rather than tactics or firepower.

Arena-Based, Physical Combat

To make the simulation tractable — and repeatable — I had to establish spatial and mechanical boundaries. That meant placing all combatants into a closed, gridded arena and modeling the fight as a sequence of discrete physical interactions.

The arena constraint serves several purposes:

- Limits the scope of chaos. In an open environment, 100 people could scatter, regroup, or avoid combat entirely. That might be realistic, but it’s impossible to simulate consistently. An enclosed space ensures that the conflict resolves.

- Enables movement logic. With a defined grid, agents (men or gorilla) can calculate paths, close distance, and prioritize targets. It’s the foundation for positioning-based strategy.

- Allows for fairness and iteration. Repeating the simulation hundreds of thousands of times means the environment must be stable. The arena becomes a neutral stage for the absurd drama to play out.

Importantly, this doesn’t mean the arena is abstract. I used realistic dimensions, randomized starting positions, and accounted for congestion and line-of-sight constraints. But it does mean that combat played out through modeled physicality: movement, proximity, engagement range, and attack resolution — all within the confines of a constrained field.

This decision was about managing complexity while still letting emergent behaviors arise. The humans could surround, block, and swarm. The gorilla could isolate, charge, or break through. The system was just bounded enough to be credible, but open enough to be interesting.
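The movement logic described above can be sketched as a single step on the grid. This is a minimal illustration rather than the project's code: the `Pos` type, the `distance` and `step_toward` functions, and the choice of Chebyshev distance (a diagonal move costs one step) are all assumptions made for the example.

```rust
/// A tile coordinate in the arena (hypothetical type for this sketch).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct Pos {
    pub x: i32,
    pub y: i32,
}

/// Chebyshev distance: the number of king-style moves between two tiles.
pub fn distance(a: Pos, b: Pos) -> i32 {
    (a.x - b.x).abs().max((a.y - b.y).abs())
}

/// Move one tile toward `target`, staying inside a `width` x `height` arena.
/// Returns the current position unchanged if the agent is already on the target.
/// Assumes `width` and `height` are both at least 1.
pub fn step_toward(from: Pos, target: Pos, width: i32, height: i32) -> Pos {
    let next = Pos {
        x: from.x + (target.x - from.x).signum(),
        y: from.y + (target.y - from.y).signum(),
    };
    Pos {
        x: next.x.clamp(0, width - 1),
        y: next.y.clamp(0, height - 1),
    }
}
```

An agent that is out of range would call `step_toward` once per round until `distance` drops to its attack range. Collision with other agents is left out here to keep the sketch short.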

Average Adult Men, Globally Distributed

The phrase “100 average men” sounds simple — until you try to simulate it.

Early on, I had to define what “average” actually meant. Strength? Speed? Height? Training? To avoid cultural bias or cherry-picking, I used publicly available anthropometric and fitness data to approximate a global average adult male between the ages of 18 and 45.

The attributes were grounded in research:

- Height and weight from World Health Organization and CDC global averages

- Physical strength calibrated using grip strength studies and basic lifting benchmarks

- Endurance and speed loosely modeled on baseline military fitness requirements

- Combat skill assumed to be zero — no formal training, just instinct and crowd dynamics

Why global? Because any narrower definition (e.g., 100 American men, or 100 athletes) introduces distortion. This meme isn’t asking whether a fight team could win. It’s asking whether humanity’s average output of physical form and decision-making could overcome nature’s most overbuilt tank.

By making the sample population as demographically wide as possible, I avoided the temptation to stack the deck — and made the simulation more sociologically honest. That choice also gave the gorilla a fairer fight. These weren’t Navy SEALs or MMA fighters. Just 100 guys with average human capabilities… and no weapons.

Rules of Engagement and Baseline Assumptions

You can’t run a million battles without rules. Even chaos needs constraints.

I had to define exactly how these 100 men and 1 gorilla would interact. Not just for balance, but to ensure the results were consistent, interpretable, and repeatable. So I wrote up a Rules of Engagement (RoE) document — not unlike the kind you’d find in military simulations or tabletop games — to govern the fight mechanics.

Here’s what I locked in:

- No weapons allowed. This was hand-to-hand combat. No makeshift clubs, no rocks, no tools. Just fists, feet, and fur.

- Arena-based layout. All combatants spawn randomly in a bounded 2D grid — the “arena” — to force proximity and eventual engagement. This removed variables like terrain, hiding, or infinite kiting.

- Combat is immediate. No negotiations, no fear, no hesitation. Every entity is hard-coded to fight to the death. It’s a ridiculous premise — and a necessary simplification.

- Turn-based logic with real-time consequences. Each round, every agent acts based on speed and proximity. They move, strike, and respond according to simple behavioral rules.

Some additional constraints that may not be obvious:

- No respawns, no healing. Once a man goes down, he stays down. The same goes for the gorilla — though that takes a lot longer.

- No coordination advantages. The 100 men don’t strategize. They don’t form ranks, traps, or flanking maneuvers. At best, they mob. At worst, they trickle in and die.

- No morale loss. No one flees. No one plays dead. Everyone fights until they can’t.

Could I have added more nuance? Sure. But every additional rule increases complexity — and this wasn’t about simulating reality to the nth decimal. It was about simulating it fairly, consistently, and in a way that exposes the core dynamics of power, numbers, and biology.

Building the Simulation Stack

Why Rust?

I picked Rust for a few reasons, and none of them involved unnecessary suffering. Rust gave me what I needed:

- Performance: I was running a million simulations. I didn’t want to wait a week.

- Memory safety: I didn’t want to chase segfaults or corrupted state halfway through a run.

- Concurrency: I wanted to spread the simulations across every core and let the CPU eat.

Also, to be honest, I wanted an excuse to work with Rust. This seemed like a good use case — bounded complexity, clear performance targets, and a contained but expandable problem domain.

System Design

To make this ridiculous idea work, I had to build a full simulation engine with just enough mechanics to matter. That meant:

- Agents: Every combatant, whether gorilla or man, is modeled as an Agent. Each has:

  - Hit points (HP)

  - Strength (STR)

  - Speed (SPD)

  - Defense (DEF)

  - Damage (DMG)

  - Critical chance (CRIT)

- Environment: Combatants spawn into a fixed-size grid. Positions are randomized. Movement and distance matter.

- Rounds: Every round, agents act in speed order. If they’re in range, they attack. If not, they move. Combat is resolved based on relative stats, with RNG baked in for hit chance and crits.

- End Condition: The battle runs until one side is fully eliminated — or 1000 rounds elapse.

There’s just enough logic to make it feel plausible — not enough to make it a full game. It’s a simulation, not a game engine.
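To make the design above concrete, here is a rough sketch of the agent record, the speed-ordered turn sequence, and the end condition. The field names follow the stat list, but the types, the `name` field, and the function names are assumptions for illustration, not the repository's actual definitions.

```rust
/// One combatant. Fields mirror the stat list above; the types and the
/// `name` field are assumptions made for this sketch.
#[derive(Debug, Clone)]
pub struct Agent {
    pub name: String,
    pub hp: i32,
    pub strength: i32,
    pub speed: i32,
    pub defense: i32,
    pub damage: i32,
    pub crit_chance: f64,
}

impl Agent {
    /// An agent with no hit points left takes no further turns.
    pub fn is_alive(&self) -> bool {
        self.hp > 0
    }
}

/// Indices of living agents, fastest first. Ties keep their original order
/// because `sort_by` is a stable sort.
pub fn turn_order(agents: &[Agent]) -> Vec<usize> {
    let mut order: Vec<usize> = (0..agents.len())
        .filter(|&i| agents[i].is_alive())
        .collect();
    order.sort_by(|&a, &b| agents[b].speed.cmp(&agents[a].speed));
    order
}

/// Round cap taken from the end condition described above.
pub const MAX_ROUNDS: u32 = 1000;

/// The battle ends when either side is wiped out or the round cap is reached.
pub fn battle_over(group_alive: usize, solo_alive: usize, round: u32) -> bool {
    group_alive == 0 || solo_alive == 0 || round >= MAX_ROUNDS
}
```

Returning indices rather than references lets the round loop mutate agents (apply damage, update positions) while walking the turn order.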

Concurrency with Rayon

Yes, I parallelized the entire thing using Rayon. Every battle simulation runs in parallel using par_iter(), and Rust’s thread safety guarantees meant I didn’t have to worry about weird cross-battle state leaks. This is why I could run 1,000,000 battles across 10 batches in a matter of hours — not days.

It just worked.
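The shape of that parallel batch can be sketched with the standard library alone. Note the swap: the project uses Rayon's `par_iter()`, while this example uses `std::thread::scope` with manual chunking so it has no dependencies. The `Winner` enum, `simulate_battle`, and `run_batch` are hypothetical names, and the battle function is a deterministic placeholder, not the real combat engine.

```rust
use std::thread;

/// Outcome of one battle (hypothetical, trimmed to what this sketch needs).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Winner {
    Group,
    Solo,
}

/// Placeholder for the real battle engine: a deterministic function of the
/// seed, so the sketch has something to run. It is NOT the article's combat
/// logic and its outcomes mean nothing.
pub fn simulate_battle(seed: u64) -> Winner {
    // A 64-bit mixing function that scrambles the seed.
    let mut z = seed.wrapping_add(0x9E37_79B9_7F4A_7C15);
    z = (z ^ (z >> 30)).wrapping_mul(0xBF58_476D_1CE4_E5B9);
    z = (z ^ (z >> 27)).wrapping_mul(0x94D0_49BB_1331_11EB);
    z ^= z >> 31;
    if z % 100 == 0 { Winner::Group } else { Winner::Solo }
}

/// Run `battles` independent simulations split across `workers` threads.
/// Each battle owns its state, so nothing is shared between threads.
pub fn run_batch(battles: u64, workers: u64) -> Vec<Winner> {
    let workers = workers.max(1);
    let chunk = (battles + workers - 1) / workers; // ceiling division
    thread::scope(|s| {
        // Spawn every worker first, then join them.
        let handles: Vec<_> = (0..workers)
            .map(|w| {
                let start = (w * chunk).min(battles);
                let end = ((w + 1) * chunk).min(battles);
                s.spawn(move || (start..end).map(simulate_battle).collect::<Vec<Winner>>())
            })
            .collect();
        // Joining in spawn order keeps results ordered by battle id.
        handles
            .into_iter()
            .flat_map(|h| h.join().expect("worker thread panicked"))
            .collect::<Vec<Winner>>()
    })
}
```

With Rayon, the chunking and joining collapse into a parallel iterator over the battle indices, which is why the original needed so little concurrency code. The key property is the same in both versions: each battle takes its inputs by value and returns a result, so there is no shared mutable state to leak between battles.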

Publishing the Code and Data

The full repo is published on GitHub at this link.

You’ll find:

- The Rust source code

- Character profiles with research

- Battle configuration with research (Rules of Engagement or RoE)

- All simulation data (1M battles)

- Output schemas for analysis

- Research from Gemini Deep Research:

  - Comparative Study: Human Male vs Lowland Gorilla Characteristics

  - Recommendations for Large-Scale Unarmed Combat Scenarios RoE

  - Deconstructing the “100 Men vs. 1 Gorilla” Meme and Its Societal Reflections

If you want to rerun battles with different character profiles or change the arena logic, it’s all there. Tweak away.

The Tools that Made It Possible

ChatGPT – As a Logic and Design Partner

I used ChatGPT as a kind of systems architect on call. It helped me structure the simulation rules, refine the logic behind agent behavior, and pressure-test assumptions at every step. When I was figuring out how movement should work — like whether combatants should prioritize speed or proximity — ChatGPT helped map out options and tradeoffs. Same for hit mechanics: Should base accuracy be flat, or should it scale with relative speed? How do you prevent crit spam while still making it feel plausible?

I also used it for what I started calling “reality checks.” For example, when I caught myself trying to simulate group morale under stress, ChatGPT gently reminded me I was building a meme simulator, not a psychology model.

It wasn’t just an assistant. It was a collaborator — one that could model combat dynamics one minute and rewrite a paragraph the next.

Here are two examples that highlight how those interactions shaped critical parts of the project:

Designing Agent Movement

Prompt Summary:

“I’m trying to figure out how to simulate 100 humans realistically surrounding a gorilla. Should there be limits to how many agents can attack per round?”

Response Excerpt:

“The crowding mechanic emerges naturally if agents can’t occupy the same tile. This implicitly models why only a subset of the 100 can strike each round — not all of them can get close. That’s not a bug; it’s a feature.”

— ChatGPT, during movement logic refinement

This was a breakthrough moment that gave spatial logic in the arena a purpose beyond simple positioning.
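A small sketch shows why the crowding limit falls out of the grid. Assuming single-tile agents and an attack range of one tile including diagonals (both assumptions, as is the `free_attack_slots` name), at most eight attackers can reach the target at once, and fewer near a wall or when neighbors are already occupied.

```rust
use std::collections::HashSet;

/// Tiles adjacent to `target` (including diagonals) that lie inside the arena
/// and are not already occupied. Each free tile is one attack slot.
pub fn free_attack_slots(
    target: (i32, i32),
    occupied: &HashSet<(i32, i32)>,
    width: i32,
    height: i32,
) -> Vec<(i32, i32)> {
    let mut slots = Vec::new();
    for dy in -1..=1 {
        for dx in -1..=1 {
            if dx == 0 && dy == 0 {
                continue; // the target's own tile
            }
            let tile = (target.0 + dx, target.1 + dy);
            let in_bounds =
                tile.0 >= 0 && tile.0 < width && tile.1 >= 0 && tile.1 < height;
            if in_bounds && !occupied.contains(&tile) {
                slots.push(tile);
            }
        }
    }
    slots
}
```

Under these assumptions, the other 92 or more men are stuck queueing behind the front rank, which is the implicit cap on attackers per round that the response describes.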

Hit Chance and Combat Balance

Prompt Summary:

“How should I calculate melee hit chance between agents using SPD stats, while keeping things balanced?”

Response Excerpt:

“Base hit = 50% + (SPD difference × 5%) is a clean, adjustable mechanic. Clamp it to 5–95% to keep it realistic. Then overlay crits as a conditional multiplier.”

— ChatGPT, validating early combat math

That one-line formula became the backbone of the hit/crit system and scaled cleanly across all 1M battles.
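The quoted formula translates almost directly into code. In this sketch the direction of the SPD difference (attacker minus defender), the 2x crit multiplier, and the function names are assumptions; the article gives only the base formula and the clamp. Random rolls are passed in as arguments so the logic stays deterministic and easy to test.

```rust
/// Hit probability from the quoted formula: 50% base, plus 5 points per point
/// of SPD advantage, clamped to the 5%-95% range.
/// Assumes the difference is attacker SPD minus defender SPD.
pub fn hit_chance(attacker_spd: i32, defender_spd: i32) -> f64 {
    let raw = 0.50 + 0.05 * f64::from(attacker_spd - defender_spd);
    raw.clamp(0.05, 0.95)
}

/// Resolve one attack given two uniform rolls in [0, 1).
/// Returns the damage dealt, or 0 on a miss.
/// The 2x crit multiplier is an assumption; the article does not state it.
pub fn resolve_attack(
    attacker_spd: i32,
    defender_spd: i32,
    base_damage: i32,
    crit_chance: f64,
    hit_roll: f64,
    crit_roll: f64,
) -> i32 {
    if hit_roll >= hit_chance(attacker_spd, defender_spd) {
        return 0; // miss
    }
    if crit_roll < crit_chance {
        base_damage * 2 // the crit check only happens once the hit has landed
    } else {
        base_damage
    }
}
```

The clamp is what keeps a much slower attacker from being shut out entirely: however large the SPD gap, a swing still lands at least 5% of the time and misses at least 5% of the time.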

Cursor – For Coordinating Rust Development

I didn’t hand-write most of the code — I orchestrated it.

The Rust codebase was co-developed by two AI agents: ChatGPT, acting as a systems logic partner, and Cursor, acting as an in-editor coding assistant. I was the conductor, supervising, debugging, and validating their work as the simulation stack came together.

Cursor was especially helpful in the flow-state work of implementation. I’d highlight a function stub, describe its intent, and Cursor would fill in the logic — often correctly. When it didn’t, I’d tune the prompts or pivot to ChatGPT for a higher-level take. The combo allowed me to rapidly prototype complex logic without getting buried in syntax or compiler edge cases.

For example:

- Cursor scaffolded the CLI and built out the core Agent methods (like movement and targeting).

- It helped integrate Rayon for parallel simulation runs — resolving lifetime issues I barely understood at the time.

- When the compiler screamed, I let Cursor take the first swing before stepping in.

This wasn’t “learning Rust” the traditional way. It was a different mode of software engineering — supervising autonomous agents that build while you think ahead.

Gemini / Deep Research – To Explore RoE Systems, Combat Design, and Stat Modeling

The Rust code might be the engine of this simulation, but the fuel was knowledge — and for that, I used Google Gemini’s Deep Research mode. This wasn’t just about finding a few quick answers. This was about understanding the principles of combat simulation, the statistical ranges for character attributes, and the psychological elements that might impact a group’s behavior in a fight.

Gemini allowed me to research multiple aspects of the simulation rapidly:

- Combat Mechanics: I needed to understand not just basic attack and defense calculations, but how different systems approach critical hits, dodge chances, and damage scaling. I explored everything from tabletop RPG rules to fighting game mechanics, all synthesized into a coherent model for the simulator.

- Stat Balancing: Creating a realistic gorilla was easy — just make it overwhelmingly strong. But building the average “100 men” was far trickier. I needed to ensure their stats were not just realistic in isolation but also plausible as a collective force. Gemini helped me explore human performance data, including average strength, endurance, and pain tolerance for adults globally.

- Rules of Engagement (RoE): Before the first line of code was written, I had to establish what “fair” meant in this context. Gemini helped me study and analyze various RoE models — from ancient gladiatorial combat to modern martial arts. This informed how I structured the arena, movement, and the behaviors of each side.

Example Callout – Researching Rules of Engagement (RoE)

Prompt: “What are some established Rules of Engagement (RoE) frameworks that could be applied to a combat scenario simulation?”

Gemini’s Response: “Common RoE frameworks include military protocols (escalation of force, proportional response), martial arts competition rules (legal strike zones, fouls), and historical gladiatorial guidelines (types of permissible weapons, fight to submission or death). Depending on your simulation context, you could establish RoE that govern allowable actions, win conditions, and ethical limits.”

- Tactical Dynamics: I wasn’t just interested in how the fight would unfold physically — I wanted to know how humans might behave in this scenario. Would they swarm? Would they retreat if overwhelmed? Would they sacrifice each other to maximize damage? Gemini’s vast resource pool helped me refine plausible behaviors, even if the final implementation was necessarily simplified.

Using Gemini wasn’t just about saving time. It was about achieving a level of depth in the simulation that would have been impossible otherwise. I could go from a question — “What are the average pain tolerance thresholds for adult men?” — to a solid answer in seconds.

And because I could ask follow-up questions directly, my research didn’t just stack facts; it built understanding.

GitHub – For Publishing and Collaboration

Once the code was functional and the simulation was running reliably, I knew I wanted to share it — both the code itself and the data it generated. GitHub was the obvious choice.

I set up a public repository where anyone can view the Rust code, the simulation configuration, the research papers I referenced, and the complete dataset of one million battle outcomes. For developers, the code is fully accessible, along with clear instructions for running their own simulations or modifying the combatants. For analysts, the raw data provides a massive playground for anyone interested in further statistical exploration.

But beyond just sharing, GitHub served another purpose: version control. With a project like this, especially one that evolved rapidly, being able to track changes, revert mistakes, and document updates was invaluable.

Here’s what’s available in the GitHub repository:

- Source Code: The complete Rust project, including all modules and dependencies.

- Simulation Data: A CSV file containing the results of all one million simulations, including battle IDs, winner outcomes, round counts, and critical metrics for each fight.

- Configuration Files: Combatant profiles for the 100 men and the gorilla, defined in JSON format, which makes them easily editable.

- Research Papers: Two research papers included in the /research folder, providing background on combat mechanics and game theory.

- Documentation: A README file explaining the project, its purpose, how to use it, and the underlying logic of the simulation.

A Peek at the Simulation Data

    {
      "battle_id": 0,
      "winner": "Solo",
      "rounds": 61,
      "group_casualties": 5,
      "solo_survived": true,
      "context": {
        "location_name": "Oslo_7944",
        "country": "Norway",
        "latitude": 60.8351,
        "longitude": 12.1318,
        "climate": "Continental",
        "weather": "Overcast",
        "is_day": false
      },
      "causal": {
        "total_critical_hits": 5,
        "group_avg_damage": 1.84,
        "max_group_damage": 81.0,
        "solo_end_hp": 116,
        "rounds_engaged": 22,
        "solo_final_blow": false
      }
    }

Example Analysis Summary

    == Analysis Summary ==
    Total Battles: 100,000
    Wins:
      - Group: 0.1%
      - Solo: 99.9%
    Average Group Casualties: 3.6
    Average Rounds: 39.3
    Climate Breakdown:
      - Temperate: 40.0%
      - Continental: 30.0%
      - Tropical: 10.0%
      - Polar: 20.0%
    Weather Breakdown:
      - Clear: 20.0%
      - Snow: 30.0%
      - Cloudy: 20.0%
      - Windy: 10.0%
      - Rain: 10.0%
      - Storm: 10.0%
    Day/Night:
      - Day: 70.0%
      - Night: 30.0%
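The headline numbers in a summary like the one above are simple aggregates over the per-battle records. Here is a minimal sketch of that aggregation. The record fields come from the sample record, while the `Summary` type, the `summarize` function, and the assumption that the `winner` field takes the value "Group" when the men win are illustrative guesses.

```rust
/// Subset of the per-battle record shown above (field names from the sample).
#[derive(Debug, Clone)]
pub struct BattleRecord {
    pub battle_id: u64,
    pub winner: String, // "Solo" in the sample; "Group" is assumed for a human win
    pub rounds: u32,
    pub group_casualties: u32,
}

/// Aggregates for one batch (hypothetical type for this sketch).
#[derive(Debug, PartialEq)]
pub struct Summary {
    pub total: usize,
    pub group_win_pct: f64,
    pub solo_win_pct: f64,
    pub avg_group_casualties: f64,
    pub avg_rounds: f64,
}

/// Aggregate a batch of records. Returns `None` for an empty batch so the
/// averages never divide by zero.
pub fn summarize(records: &[BattleRecord]) -> Option<Summary> {
    if records.is_empty() {
        return None;
    }
    let n = records.len() as f64;
    let group_wins = records.iter().filter(|r| r.winner == "Group").count() as f64;
    let solo_wins = records.iter().filter(|r| r.winner == "Solo").count() as f64;
    let casualties: u64 = records.iter().map(|r| u64::from(r.group_casualties)).sum();
    let rounds: u64 = records.iter().map(|r| u64::from(r.rounds)).sum();
    Some(Summary {
        total: records.len(),
        group_win_pct: group_wins / n * 100.0,
        solo_win_pct: solo_wins / n * 100.0,
        avg_group_casualties: casualties as f64 / n,
        avg_rounds: rounds as f64 / n,
    })
}
```

The climate, weather, and day/night breakdowns would follow the same pattern, counting records per category and dividing by the total.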

By making the project open-source (MIT License), I’ve invited the internet to test it, challenge it, and even modify it. If someone wants to tweak the stats, add new combatants, or design an entirely different scenario, they have a ready-made framework to build on.

And as a bonus, GitHub’s issue tracking means I can document any bugs or unexpected behavior as the project continues to evolve. If you’re curious, you can clone the repository, run the simulations, and even modify the combatants or rules to see how they affect the outcomes. Feel free to fork the repo, submit issues if you find any bugs, or even open a pull request if you think you can improve the code.

Explore the full code, data set, and research materials here: GitHub Repository

Terminal + Cargo – For Build, Test, and Batch Run Automation

Every battle in this simulation was executed directly from the terminal, using Rust’s Cargo toolchain. This setup wasn’t just for convenience — it was critical for performance and scalability. Cargo, Rust’s package manager and build system, allowed me to easily compile, test, and execute the simulation with a single command.

But this wasn’t just a one-off. With Rayon (Rust’s data-parallelism library) integrated into the simulation code, I could use every core to run battles side by side. Instead of running one battle at a time, which would have taken days, each batch of 100,000 battles finished in minutes.

How It Worked

- Compilation: With a single command:

Command:

    cargo build --release

- Execution: Running the simulation with batch control:

Command:

    cargo run --release -- simulate 100000 1 Man Gorilla 100 1

Explanation:

100000 battles per batch.

1 for batch ID (helpful for organization).

Man and Gorilla as the combatants.

100 men vs. 1 gorilla.

- Parallel Processing with Rayon: The simulation was set up to leverage all available CPU cores using Rayon. This meant that every core was executing separate battles, accelerating the total runtime.

- Data Export: Each batch of simulations generated a CSV file, which I could immediately review or use for analysis.
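The positional layout of the `simulate` command shown above (battle count, batch ID, the two combatant profiles, then the two head counts) could be parsed along these lines. The `SimulateArgs` struct and `parse_simulate_args` function are hypothetical; the repository's actual argument handling may look quite different.

```rust
/// Parsed form of
/// `simulate <battles> <batch_id> <group> <solo> <group_count> <solo_count>`.
/// Hypothetical type: field names are chosen for this sketch.
#[derive(Debug, PartialEq)]
pub struct SimulateArgs {
    pub battles: u64,
    pub batch_id: u32,
    pub group_profile: String,
    pub solo_profile: String,
    pub group_count: u32,
    pub solo_count: u32,
}

/// Parse the arguments that follow `--` on the `cargo run` command line.
pub fn parse_simulate_args(args: &[&str]) -> Result<SimulateArgs, String> {
    match args {
        ["simulate", battles, batch_id, group, solo, group_count, solo_count] => {
            Ok(SimulateArgs {
                battles: battles
                    .parse::<u64>()
                    .map_err(|e| format!("battles: {e}"))?,
                batch_id: batch_id
                    .parse::<u32>()
                    .map_err(|e| format!("batch_id: {e}"))?,
                group_profile: group.to_string(),
                solo_profile: solo.to_string(),
                group_count: group_count
                    .parse::<u32>()
                    .map_err(|e| format!("group_count: {e}"))?,
                solo_count: solo_count
                    .parse::<u32>()
                    .map_err(|e| format!("solo_count: {e}"))?,
            })
        }
        _ => Err(
            "usage: simulate <battles> <batch_id> <group> <solo> <group_count> <solo_count>"
                .to_string(),
        ),
    }
}
```

Keeping the profile names as strings means a different matchup is only a command-line change, provided matching profiles exist in the configuration files.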

Why Parallelism (Rayon) Was Critical

- Without Rayon, each battle would have been processed sequentially, dramatically increasing the time required.

- With Rayon, each core was independently running battles, completing batches of 100,000 in minutes.

- This approach scaled perfectly with my hardware (M3 MacBook Pro).

Example Workflow

- Code changes? Recompile with:

    cargo build --release

- Need a quick test run? Simulate a small batch:

    cargo run --release -- simulate 1000 1 Man Gorilla 100 1

- Ready to go full send? Launch a full batch:

    cargo run --release -- simulate 100000 1 Man Gorilla 100 1

- Results ready? Analyze them directly from the terminal:

    cargo run --release -- analyze results_1.csv

Why This Setup Matters

This setup wasn’t just about speed — it was about control and the ability to test rapidly. By using Cargo and the terminal, I could:

- Modify combatants or rules without any GUI overhead.

- Run massive simulations without crashing my system.

- Immediately see results and adjust based on findings.

This stack made the difference between a fun experiment and a fully operational combat simulation system.

What I Learned from 1,000,000 Battle Simulations

After running a million simulated battles between 100 average men and a single silverback gorilla, the results are both predictable and revealing. The data paints a picture of raw dominance, brief struggles, and the limits of human strength — even in overwhelming numbers.

Win/Loss Ratio

The gorilla won 98.94% of the battles, leaving the group of 100 men with a win rate of just 1.06%. That’s not even a fighting chance; it’s a statistical rounding error. Because the men in this model cannot coordinate, the rare instances where they emerged victorious were outliers, achieved only through an unlikely mix of favorable positioning, luck, and sheer persistence.

Casualty Patterns

On average, the human group suffered 4.1 casualties, even in battles they won. This means that even in their rare victories, multiple men did not survive. The gorilla’s resilience was so high that it consistently inflicted serious losses, even against the few groups that managed to bring it down.

Round Duration

Battles lasted 38.49 rounds on average, making these skirmishes quick but not instant. In practical terms, that means a typical battle lasted just long enough for the gorilla to pick apart the attacking humans. The balance here is telling: the men’s numbers allowed them to last multiple rounds, but the gorilla’s overwhelming strength and speed ultimately decided the outcome.

Environmental Influence

The simulation’s randomized environments added an extra layer of unpredictability:

- Temperate climates were the most common, making up 36.24% of battles.

- Arid (24%) and Continental (23.98%) environments were also common.

- Polar climates (5.01%) and Tropical (10.77%) conditions were the least frequent.

Weather varied widely, with Clear (24.98%) and Rain (17.69%) being the most frequent, while Blizzard (1.24%) and Fog (1.28%) were rare.

Interestingly, the time of day was almost perfectly balanced between Day (50.03%) and Night (49.97%), so neither lighting condition was over-represented in the results.

Outlier Scenarios

In the extremely rare cases where the human group won, it wasn’t due to any single heroic act but rather a convergence of favorable conditions:

- The humans managed to swarm the gorilla, exploiting its limited range of motion.

- Luck played a significant role, with a few critical hits landing at key moments.

- Poor environmental conditions — like slippery terrain — may have briefly neutralized the gorilla’s speed advantage.

Emergent Behavior

Beyond the raw statistics, the simulation also surfaced a few interesting patterns:

- Battles where the men won tended to have higher casualty counts and longer durations, suggesting that their success was the result of a grueling war of attrition.

- When men won, it was often because they managed to stagger the gorilla, using sheer numbers to continuously harass and distract it.

- The gorilla’s overwhelming strength often led to scenes of utter chaos — entire groups wiped out in seconds due to rapid, brutal attacks.

- In the rare cases where the men won, they were often forced to sacrifice dozens of their number to create an opening.

The simulated conflict reveals a fundamental truth: Numbers alone are not enough. Without tactics, coordination, or technological advantage, even a hundred humans are outmatched by one of nature’s most powerful creatures.

But of course, that’s the nature of a meme. It’s a scenario designed for argument, not reason. And yet, by simulating it, we’ve forced the absurd into a kind of logical order — even if that order is mostly a massacre.

But Also… Why Are We Like This?

At first glance, the “100 men vs. 1 gorilla” meme seems like a harmless, even comical, exercise in absurd hypothetical debate. But when you look a little closer, it reveals a lot more about us — about our culture, our psychology, and our comfort with violence as a spectacle.

An Ancient Fascination with Conflict:

Humanity has always had a strange fascination with conflict. From gladiatorial combat in ancient Rome to modern sports, we have repeatedly found ways to transform life-and-death struggles into entertainment. The “100 men vs. 1 gorilla” meme is just another expression of this tendency, a safe, digital arena where we can explore ideas of dominance, strength, and survival without any real consequence.

Hubris and Human Exceptionalism:

A common theme in the online debates is the consistent underestimation of the gorilla. Arguments in favor of “Team 100 Men” often hinge on the idea that human intelligence, teamwork, or sheer numbers can overcome even the most formidable of natural obstacles. But beneath this confidence is a form of hubris — a belief in human supremacy over nature. We like to imagine that, with enough planning, even our most primal instincts can conquer the raw, unrestrained power of the natural world.

Masculinity, Strength, and the Performance of Bravado:

The question is explicitly framed as “100 men vs. 1 gorilla,” which immediately ties the debate to notions of masculinity. The insistence by some participants that the men could — or even should — win is often framed as a matter of pride. This is not just a question of who would win, but of who should win — and what it means if they don’t. In some ways, it becomes a digital proving ground, where commenters get to perform a kind of armchair heroism, confidently outlining strategies and expressing their own (often exaggerated) beliefs about toughness, strength, and dominance.

Comfort with Violence as a Spectacle:

Perhaps the most unsettling aspect of the meme is how easily we turn the question of a bloody, life-or-death battle into a lighthearted conversation. The hypothetical nature of the scenario seems to justify this. It’s not real, so it’s okay to speculate, to joke, to strategize. But is it really that simple? Does our willingness to engage with such a brutal idea — even in jest — suggest something darker about us? Or is this just a safe outlet for exploring human nature and animal instincts, free from consequence?

The Ethics of the Hypothetical:

Some have pointed out that the meme is, at its core, a question that trivializes violence against animals. Even though the gorilla is not real, the scenario reduces it to a mere obstacle, an object to be overcome. In a world where actual gorillas are endangered, struggling against human encroachment and exploitation, the meme might even be seen as a reflection of our careless attitude toward wildlife and nature.

Yet, there is also a counterargument — that the meme, in its absurdity, brings attention to gorillas as a species. That it has sparked a level of cultural interest that could be redirected toward conservation efforts or education about the real lives of these incredible creatures.

So, Why Are We Like This?

The “100 men vs. 1 gorilla” meme is a cultural Rorschach test. It tells us as much about ourselves as it does about the hypothetical scenario. It highlights our fascination with violence, our belief in human superiority, our strange relationship with masculinity, and our willingness to laugh at even the most brutal ideas — as long as they stay hypothetical.

Final Thoughts

If you had told me when this started that I would end up simulating 100 men vs. a gorilla one million times, I might have laughed — but here we are. And what started as a playful dive into a viral meme ended up as something more: a study in human nature, technical problem-solving, and the strange cultural intersection of logic, ethics, and humor.

The “100 men vs. 1 gorilla” meme is more than just an internet joke — it’s a micro-society. It’s a mindspace where assumptions about strength, strategy, and survival get tested, where confidence meets reality, and where the absurd becomes a stage for very real debates about masculinity, violence, and our place in the natural world.

In building this simulation, I had to consider everything from character stats and combat mechanics to ethical questions about why we even find this idea entertaining. I learned about Rust, AI-assisted coding, and the nuanced ways a simple question can reflect complex human values.

If you’re reading this, you can fork the repo, tweak the variables, and run your own battles. Want to see if a different RoE setup gives the men a better chance? Curious if a different stat distribution for the gorilla might change the outcome? The code, the data, and the tools are all there. Your version of the simulation might reveal something I missed — or lead to new questions altogether.

Because that’s the real magic of technical play: it lets you take something ridiculous, something hypothetical, and turn it into a playground for logic, creativity, and even self-reflection.

Maybe the men never win. But maybe the real victory is realizing why that’s such a compelling question in the first place.

Be Kind to the Real Gorillas

If this article made you think, laugh, or question something, consider making a real difference. The Dian Fossey Gorilla Fund International works tirelessly to protect gorillas and their habitats. Your support can help ensure these incredible animals thrive in the wild.